The invisible stack and the rise of Agentic Middleware

For the past three years, enterprise AI conversations have been dominated by one question: "How do we prompt it?" "How do we teach our people to talk to the machine?"

We hired Prompt Engineers, organized "generative AI bootcamps," and filled our Slack channels with tips on how to coax a better response out of an LLM.

But as we enter 2026, that era is ending. The "Chat" interface, once hailed as the revolution, is starting to look like a transitional fossil.

The most powerful AI of 2026 isn't the one you talk to. It's the one you never see. We have moved past the Human-in-the-Loop (HITL) era of constant handholding and entered the era of Human-on-the-Loop (HOTL).

Why prompting alone is no longer enough

Prompt engineering emerged as a necessary skill during AI's adolescence. When models were brittle, users had to compensate with clever phrasing, context stuffing, and trial-and-error conversations.

In mature AI systems, intelligence shifts away from the interface and into the infrastructure. The most valuable AI in 2026 will not ask, "What would you like me to do?" It will infer intent from behavior, context, policy, and history - then act.

This mirrors every major computing transition:

- We stopped configuring servers manually once cloud abstractions matured

- We stopped managing files once apps became workflow-centric

- We stopped "searching the web" once feeds became predictive

AI is now following the same trajectory.

The obsession with prompting is fading because decision-making is moving into the background, embedded directly into operating systems, enterprise software, and business processes.

The rise of the Invisible Stack

In 2024, AI was a destination. You went to a website or opened an app to "do AI." In 2026, AI has become an environmental layer - an Invisible Stack baked directly into the OS and the enterprise ecosystem.

This Invisible AI Stack sits between systems, not users:

- Between your email and calendar

- Between your CRM and finance tools

- Between your bank account, invoices, and compliance rules

- Between your supply chain signals and procurement actions

We are seeing a decline in the overall focus on prompting because the "Intent Gap" has been closed. Instead of waiting for you to describe a task, the AI observes your workflows, anticipates the objective, and prepares the result. The interface is no longer a blinking cursor; it is a notification that says: "I've reorganized your Q3 travel to align with your new budget constraints and updated the stakeholders. Click here to confirm."

This is the transition from Generative AI (making things) to Agentic AI (doing things).

From In-the-Loop to On-the-Loop

To understand the shift, we must look at the control model.

- Human-In-the-Loop: The AI cannot proceed without a human command at every stage. This is a bottleneck. It's labor-intensive and creates "prompt fatigue."

- Human-On-the-Loop: The AI operates autonomously within a set of guardrails. It executes research, data synthesis, and cross-platform coordination. The human role shifts from operator to overseer.

In 2026, the AI agent acts as Agentic Middleware. It sits between disparate SaaS silos (ERP, CRM, and private banking data), knitting together workflows that previously required a four-person team.

For a CEO, this means the AI isn't just writing an email. It is identifying a supply chain bottleneck in the ERP, cross-referencing it with vendor contracts, drafting a mitigation strategy, and presenting you with three options to approve. You aren't in the loop; you are on the loop, providing the final executive Yes.

The tech making this possible

This shift isn't just a UI preference; it's driven by three specific architectural breakthroughs:

- LAMs (Large Action Models): While LLMs understand language, LAMs understand interfaces. These models are trained to navigate software the way a human does, clicking buttons and moving data between apps without needing an API for every single connection.

- Memory persistence & context windows: 2026-era agents possess long-term organizational memory. They don't start every session with a blank slate; they understand your company's specific tone, your past decisions, and your shifting priorities.

- Autonomous orchestration layers: We have moved toward multi-agent systems. One agent identifies the problem, another gathers data, and a third checks for compliance. This internal system of checks and balances reduces hallucinations and allows the AI to self-correct before the human ever sees the output.
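
The three-agent hand-off described in the last point can be sketched in a few lines. This is a minimal illustration, not a production orchestration layer: the `Finding` type, the function names, and the keyword-based compliance check are all assumptions made for the example.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional, Set


@dataclass
class Finding:
    """Work item passed between agents; every field here is illustrative."""
    issue: str
    evidence: List[str] = field(default_factory=list)
    compliant: Optional[bool] = None  # None until the compliance agent runs


def detect(signal: str) -> Finding:
    # Agent 1: turn a raw signal into a named problem.
    return Finding(issue=f"Possible bottleneck: {signal}")


def gather(finding: Finding, sources: Dict[str, str]) -> Finding:
    # Agent 2: attach supporting data from each (hypothetical) source system.
    finding.evidence = [f"{name}: {value}" for name, value in sources.items()]
    return finding


def check_compliance(finding: Finding, banned_terms: Set[str]) -> Finding:
    # Agent 3: mark the finding non-compliant if the evidence contains a banned term.
    text = " ".join(finding.evidence).lower()
    finding.compliant = not any(term in text for term in banned_terms)
    return finding


def run_pipeline(signal: str, sources: Dict[str, str],
                 banned_terms: Set[str]) -> Optional[Finding]:
    finding = check_compliance(gather(detect(signal), sources), banned_terms)
    # Only compliant findings are surfaced to the human overseer.
    return finding if finding.compliant else None
```

In a real deployment each function would be a separate model or service; the point of the sketch is only that the output of one agent is checked by another before a human sees it.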

Use cases

| Business function | Human-in-the-Loop | Human-on-the-Loop | Real-world action |
|---|---|---|---|
| Finance & Fraud | Analysts manually prompt AI to summarize high-risk transactions for investigation. | Systems like JPMorgan Chase's OmniAI autonomously flag, pause, and draft reports on anomalies. | Agentic Middleware manages the investigation workflow; the human auditor only provides a final "Yes/No" on the proposed resolution. |
| Procurement | Prompt a chatbot to negotiate terms with a single vendor you've identified. | Walmart uses AI agents to autonomously negotiate thousands of "tail-end" supplier contracts simultaneously. | Large Action Models analyze competitor costs and inventory in real-time to close deals within set budget guardrails without manual intervention. |
| Healthcare | Doctors ask AI to summarize patient charts or check for drug-to-drug interactions. | Clinical support tools provide a "First Draft" of the patient's entire care plan before the doctor enters the room. | Persistent Context cross-references the patient's history and real-time vitals to suggest a treatment, allowing the doctor to focus strictly on validation and patient care. |
| Supply Chain | Managers prompt AI to find alternative shipping routes after a delay is reported. | Amazon & Walmart logistics agents monitor global port data and weather to reroute shipments before a bottleneck occurs. | Autonomous orchestration triggers secondary contracts and updates warehouse schedules; the Supply Chain Director oversees the Strategic Resilience of the entire network. |

The HOTL governance framework: 2026

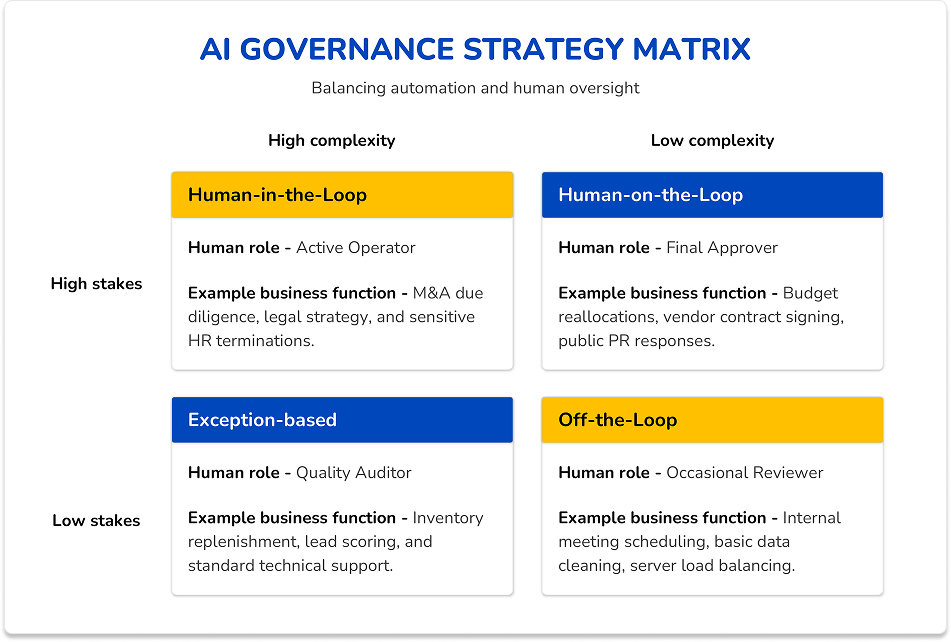

The objective of this framework is to assign every AI-driven process a Control Tier. This prevents the two greatest risks of the agentic era: human bottlenecks in low-risk tasks and runaway autonomy in high-risk tasks.

1. The Autonomy matrix

We categorize business functions based on complexity (how many variables are involved) and the stakes (the cost of a mistake).
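
One way to make the matrix concrete is a small scoring function. The sketch below is hedged: the 1-to-5 scales, the thresholds, and the assumption that Tier 1 carries the tightest human control are all invented for illustration. Only the idea that Tier 3 is the operational, lowest-risk tier comes from the roadmap later in this article.

```python
def control_tier(complexity: int, stakes: int) -> int:
    """Map 1-5 complexity and stakes scores to a Control Tier.

    Thresholds are illustrative assumptions, not a published standard.
    """
    for name, score in (("complexity", complexity), ("stakes", stakes)):
        if not 1 <= score <= 5:
            raise ValueError(f"{name} must be between 1 and 5")
    if stakes >= 4:
        return 1  # high cost of a mistake: tightest human control (assumed)
    if stakes >= 3 or complexity >= 4:
        return 2  # middle tier: autonomy with closer review (assumed)
    return 3      # low stakes, low complexity: operational tier
```

For example, a routine calendar reshuffle scored `control_tier(2, 1)` lands in Tier 3, while a large payment scored `control_tier(1, 5)` lands in Tier 1 no matter how simple it is.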

2. Standard operating procedures for The Loop

For any process designated as Human-on-the-Loop, the following governance pillars must be established:

The Veto Protocol

Every autonomous agent must have a standardized Pause Point. Before a final action is taken, such as sending a payment or publishing a report, the agent populates a Decision Summary. This summary must answer three questions for the human supervisor:

- What action am I taking?

- Why is this the optimal path? (The Logic Chain)

- What is the projected impact?
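
The three questions above map naturally onto a small record that gates execution. This is a sketch under stated assumptions: `DecisionSummary`, `pause_point`, and the `approve` and `execute` callbacks are hypothetical names, and a real system would route approval through a review interface rather than a function call.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass(frozen=True)
class DecisionSummary:
    action: str              # What action am I taking?
    logic_chain: List[str]   # Why is this the optimal path?
    projected_impact: str    # What is the projected impact?


def pause_point(summary: DecisionSummary,
                approve: Callable[[DecisionSummary], bool],
                execute: Callable[[], str]) -> str:
    """Run `execute` only if the supervisor approves a complete summary."""
    if not (summary.action and summary.logic_chain and summary.projected_impact):
        raise ValueError("Decision Summary must answer all three questions")
    if approve(summary):
        return execute()
    return "vetoed"  # the action is never performed
```

Rejecting incomplete summaries before asking for approval keeps the supervisor from being asked to sign off on an action the agent cannot explain.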

Algorithmic Guardrails (the safety fence)

Instead of telling the AI how to do the work, leadership defines the boundaries. For example:

- Financial guardrail: The agent may reallocate budget between marketing channels autonomously, provided the shift does not exceed $5,000 per day.

- Compliance guardrail: All outbound vendor communications must be screened against the latest GDPR update before being queued for approval.
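
The financial guardrail can be expressed as a check the agent must pass before acting. The sketch assumes the $5,000 limit applies to the cumulative amount shifted per calendar day, which is one reading of the rule; the `BudgetGuardrail` class and its method names are hypothetical.

```python
from collections import defaultdict
from datetime import date
from decimal import Decimal

DAILY_LIMIT = Decimal("5000")  # taken from the financial guardrail example


class BudgetGuardrail:
    def __init__(self, daily_limit: Decimal = DAILY_LIMIT):
        self.daily_limit = daily_limit
        self._moved = defaultdict(Decimal)  # date -> total shifted that day

    def allow(self, amount: Decimal, day: date) -> bool:
        """Record and permit a shift only if the day's total stays within the limit."""
        if amount <= 0:
            raise ValueError("amount must be positive")
        if self._moved[day] + amount > self.daily_limit:
            return False  # outside the fence: escalate to a human instead
        self._moved[day] += amount
        return True
```

Leadership sets `daily_limit`; the agent decides everything else. A refused shift is not recorded, so it does not count against the day's total.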

The Audit Trail

In the Invisible Stack era, the process is hidden, so the trail must be transparent. Every HOTL system must maintain a timestamped log of which data points influence a decision. This allows for Post-Action Review (PAR), where managers review a week's worth of autonomous actions to tune the agent's future behavior.
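
A minimal version of such a trail is an append-only list of timestamped records plus a query for the review window. The `AuditTrail` class and its field names are assumptions for illustration; a production system would write to durable, tamper-evident storage rather than an in-memory list.

```python
import json
from datetime import datetime, timedelta, timezone


class AuditTrail:
    def __init__(self):
        self._entries = []  # serialized records, only ever appended to

    def record(self, action, data_points, when=None):
        """Log an action with the data points that influenced it."""
        entry = {
            "timestamp": (when or datetime.now(timezone.utc)).isoformat(),
            "action": action,
            "data_points": dict(data_points),
        }
        self._entries.append(json.dumps(entry, sort_keys=True))

    def review(self, since):
        """Return entries at or after `since` (timezone-aware) for Post-Action Review."""
        entries = [json.loads(line) for line in self._entries]
        return [e for e in entries
                if datetime.fromisoformat(e["timestamp"]) >= since]
```

A weekly PAR then reduces to `trail.review(since=datetime.now(timezone.utc) - timedelta(days=7))`.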

3. Implementation roadmap

- Inventory the silos: Identify where Agentic Middleware can bridge existing gaps (e.g., your CRM talking to your Billing system).

- Assign tiering: Use the Autonomy Matrix to label these workflows. Start with Tier 3 (Operational) to build trust.

- Define approval portals: Create a single Command Center where executives can see all pending On-the-loop approvals in one place, rather than chasing notifications across multiple apps.

- Monitor drift: Quarterly reviews are essential to ensure the AI's autonomous logic hasn't drifted from the company's evolving strategic goals.

Why C-suite leaders should care now

For the C-Suite, the Year of Human-on-the-Loop requires a fundamental mindset shift.

1. Decision velocity becomes a strategic asset

When AI handles 90% of operational decisions, leadership can focus on:

- Direction

- Risk

- Ethics

- Long-term strategy

HOTL models dramatically reduce cognitive load while preserving accountability.

2. Governance becomes programmable

Policies, thresholds, and compliance rules become machine-interpretable constraints rather than PDF documents. Executives stop reviewing processes and start governing outcomes.

3. Trust replaces control

Human-on-the-Loop systems require leaders to shift from direct control to trust-based supervision, supported by transparency and auditability. This is not a loss of power; it is leverage.

Is your organization ready for Human-On-The-Loop?

If you find yourself nodding to one or more of the points below, you're exactly the kind of enterprise most likely to succeed with an agentic AI operating model, and this is where Torry Harris can help accelerate your transition:

- Your teams are drowning in manual hand-offs between systems (ERP, CRM, finance, legacy, SaaS), slowing down innovation and decision velocity.

- You have invested in AI pilots but they rarely scale beyond point solutions because data and systems aren't integrated.

- Your leadership wants both governance and autonomy, not more stop-gaps and alerts.

- You have strategic digital initiatives (API, marketplace, cloud migration, GCC, transformation) stalled by technical debt.

- You realize that AI should operate across systems, not just within a chatbot window, but the path to get there feels unclear.

Shreya Kapoor, Senior Content Strategist